Latest revision as of 14:46, 31 August 2020

Depth Estimation

Goal: Generate depth values from one or two images.

Background

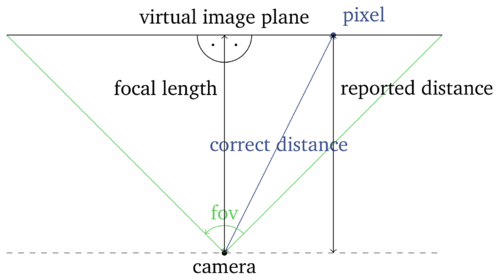

Z-depth vs Euclidean depth

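For standard (rectilinear) images, people typically use z-depth. This is the distance from the camera to a point measured along the camera's z-axis (the optical axis), i.e., the point's z-coordinate in camera space.

The straight-line distance from the camera center to the point is called Euclidean depth.

You can convert between the two using the angle \(\theta\) between each pixel's viewing ray and the optical axis: \(z = d \cos\theta\). Multiply Euclidean depth \(d\) by \(\cos\theta\) to get z-depth, or divide z-depth by \(\cos\theta\) to get Euclidean depth.

To compute this angle, first find the 2D distance \(r\) (in pixels) from the pixel to the center of the image, then convert it to an angle with \(\theta = \arctan(r / f)\), where \(f\) is the focal length in pixels derived from the known field of view.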
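A minimal NumPy sketch of this conversion, assuming an ideal pinhole camera with square pixels, the principal point at the image center, and a known horizontal field of view (`fov_x_deg`, in degrees). The function names are illustrative, not from any library.

```python
import numpy as np


def pixel_ray_angles(height: int, width: int, fov_x_deg: float) -> np.ndarray:
    """Angle (radians) between each pixel's viewing ray and the optical axis.

    Assumes a pinhole camera with square pixels, the principal point at the
    image center, and fov_x_deg being the horizontal field of view.
    """
    # Focal length in pixels from the horizontal field of view.
    f = (width / 2) / np.tan(np.radians(fov_x_deg) / 2)
    # Pixel-center coordinates relative to the image center.
    ys = np.arange(height) + 0.5 - height / 2
    xs = np.arange(width) + 0.5 - width / 2
    xx, yy = np.meshgrid(xs, ys)
    r = np.hypot(xx, yy)  # 2D distance from the image center, in pixels
    return np.arctan(r / f)


def z_to_euclidean(z_depth: np.ndarray, fov_x_deg: float) -> np.ndarray:
    # Euclidean depth = z-depth / cos(theta).
    theta = pixel_ray_angles(*z_depth.shape, fov_x_deg)
    return z_depth / np.cos(theta)


def euclidean_to_z(euclidean: np.ndarray, fov_x_deg: float) -> np.ndarray:
    # z-depth = Euclidean depth * cos(theta).
    theta = pixel_ray_angles(*euclidean.shape, fov_x_deg)
    return euclidean * np.cos(theta)
```

For a flat wall facing the camera (constant z-depth), the Euclidean depth grows toward the image corners, since those rays travel farther to reach the wall.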

Depth vs. Disparity

For stereo methods, people usually estimate pixel disparity rather than depth.

That is, disparity measures how far a pixel moves along the epipolar line between the two images.

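Usually, this involves first rectifying the images so that epipolar lines become horizontal rows (e.g., by estimating the epipolar geometry from feature matches with RANSAC or a similar robust method). Then a cost volume is built, storing a matching cost for each pixel at each candidate disparity. Finally, an argmin over the disparity dimension of the cost volume picks the best disparity estimate for each pixel.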
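The cost-volume-plus-argmin step can be sketched in NumPy as below. This is a toy version under stated assumptions: the inputs are already rectified grayscale float images, the matching cost is the per-pixel absolute intensity difference with no window aggregation, and `max_disp` is a hypothetical search range. Practical methods aggregate costs over neighborhoods or use learned features, so this per-pixel version is noisy on real images.

```python
import numpy as np


def sad_cost_volume(left: np.ndarray, right: np.ndarray, max_disp: int) -> np.ndarray:
    """Per-pixel absolute-difference cost volume of shape (max_disp, H, W).

    Assumes rectified images, so a left pixel at column x matches the right
    pixel at column x - d on the same row for disparity d.
    """
    h, w = left.shape
    # Columns with no candidate match for a given disparity get infinite cost.
    cost = np.full((max_disp, h, w), np.inf)
    for d in range(max_disp):
        cost[d, :, d:] = np.abs(left[:, d:] - right[:, : w - d])
    return cost


def winner_take_all(cost: np.ndarray) -> np.ndarray:
    # For each pixel, pick the disparity with the lowest matching cost.
    return np.argmin(cost, axis=0)
```

Because the match for disparity \(d\) lies \(d\) columns to the left in the right image, the first \(d\) columns have no candidate and are left at infinite cost, so the argmin ignores them.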

Disparity is related to depth by the following formula: \[disparity = \frac{baseline \cdot focal}{depth}\]

- \(focal\) is the focal length in pixels, i.e., the distance from the camera center to the image plane measured in pixels.

  - It can be calculated as \(\frac{height}{2} \cot\left(\frac{fov_h}{2}\right)\), where \(fov_h\) is the field of view spanning the image height.

  - Don't think of it as a physical depth: if the image plane were moved closer to the camera, the pixels would shrink proportionally, so the distance measured in pixels would stay the same.

  - In the formula, this term accounts for image resolution: for the same depth, doubling the resolution doubles both the focal length in pixels and the disparity in pixels.

- \(baseline\) is the distance between the two camera positions. It should be in the same units as your depth.
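The disparity formula and the focal-length expression translate directly into a few helper functions. This is a sketch; the names are hypothetical, and it assumes `fov_h_deg` is the field of view spanning the image height, in degrees.

```python
import math


def focal_length_px(height_px: int, fov_h_deg: float) -> float:
    """Focal length in pixels: (height / 2) * cot(fov_h / 2).

    Assumes fov_h_deg is the field of view spanning the image height.
    """
    return (height_px / 2) / math.tan(math.radians(fov_h_deg) / 2)


def disparity_to_depth(disparity_px: float, baseline: float, focal_px: float) -> float:
    # depth = baseline * focal / disparity; depth comes out in baseline's units.
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinite depth)")
    return baseline * focal_px / disparity_px


def depth_to_disparity(depth: float, baseline: float, focal_px: float) -> float:
    # disparity = baseline * focal / depth, in pixels.
    if depth <= 0:
        raise ValueError("depth must be positive")
    return baseline * focal_px / depth
```

For example, a 480-pixel-tall image with a 90° field of view along its height has a focal length of 240 px, so with a 0.1 m baseline a 24 px disparity corresponds to a depth of 0.1 × 240 / 24 = 1 m. At 960 pixels tall the focal length becomes 480 px and the same point has a 48 px disparity, which gives the same depth.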

Stereo Depth

Typically, people use a cost volume to estimate depth from a stereo camera setup.

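- [StereoNet (ECCV 2018)](https://openaccess.thecvf.com/content_ECCV_2018/html/Sameh_Khamis_StereoNet_Guided_Hierarchical_ECCV_2018_paper.html) (My Summary) is a real-time, edge-aware stereo depth method by Google's Augmented Perception team.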

- [Casual 3D photography (SIGGRAPH ASIA 2017)](http://visual.cs.ucl.ac.uk/pubs/casual3d/) includes a method for refining cost volumes and a system for synthesizing views from a few dozen photos.

Depth from Motion

Depth is generated in real time from the motion of the camera.