
SynSin: End-to-end View Synthesis from a Single Image (CVPR 2020)

Authors: Olivia Wiles, Georgia Gkioxari, Richard Szeliski, Justin Johnson

Affiliations: University of Oxford, Facebook AI Research, Facebook, University of Michigan

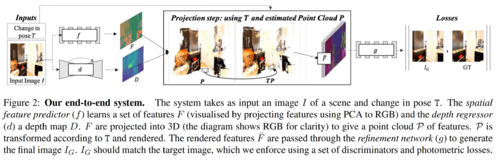

Method

Figure 2 from the SynSin paper.

First, the depth network \(d\) predicts a depth for each pixel, and the feature network \(f\) predicts a feature vector for each pixel.

The predicted depths are used to lift these per-pixel features into a 3D point cloud of features \(P\).
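The lifting step can be sketched as standard pinhole unprojection: each pixel \((u, v)\) with depth \(z\) becomes a 3D point that carries that pixel's feature vector. This is a minimal plain-Python sketch, assuming a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` and treating the outputs of \(d\) and \(f\) as nested lists; the function name `unproject` is illustrative and not taken from the SynSin code.

```python
from typing import List, Tuple

# A point is ((x, y, z), feature_vector).
Point = Tuple[Tuple[float, float, float], List[float]]


def unproject(depth: List[List[float]], features: List[List[List[float]]],
              fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Lift every pixel (u, v) with depth z to a 3D point that carries its feature.

    depth:    H x W depths (stand-in for the output of the depth network d).
    features: H x W x C features (stand-in for the output of the feature network f).
    fx, fy, cx, cy: hypothetical pinhole intrinsics (an assumption of this sketch).
    """
    cloud: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Invert the pinhole projection u = fx * x / z + cx (likewise for v and y).
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            cloud.append(((x, y, z), list(features[v][u])))
    return cloud


cloud = unproject([[2.0, 2.0], [2.0, 2.0]],
                  [[[1.0], [2.0]], [[3.0], [4.0]]],
                  fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud[0])  # ((-1.0, -1.0, 2.0), [1.0])
```

Every pixel yields exactly one point, so the cloud has \(H \times W\) entries; pixels farther from the principal point \((c_x, c_y)\) spread out more as depth grows.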

The point cloud is then repositioned into the target view using the relative camera transformation matrix \(T\).
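Repositioning amounts to applying a rigid transform to every point and then projecting it into the target image. The sketch below assumes \(T\) is a 4x4 row-major matrix that maps input-camera coordinates to target-camera coordinates, and that both views share the same hypothetical pinhole intrinsics; `transform_points` and `project` are illustrative names, not SynSin APIs.

```python
from typing import List, Sequence, Tuple

Point = Tuple[Tuple[float, float, float], List[float]]


def transform_points(cloud: Sequence[Point], T: Sequence[Sequence[float]]) -> List[Point]:
    """Apply a 4x4 rigid transform T (row-major, last row [0, 0, 0, 1]) to each point.

    Assumes T maps input-camera coordinates to target-camera coordinates.
    Features are carried along unchanged; only positions move.
    """
    moved: List[Point] = []
    for (x, y, z), feat in cloud:
        homog = (x, y, z, 1.0)  # homogeneous coordinates
        xn, yn, zn = (sum(T[r][c] * homog[c] for c in range(4)) for r in range(3))
        moved.append(((xn, yn, zn), feat))
    return moved


def project(point: Tuple[float, float, float], fx: float, fy: float,
            cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole projection into the target image; returns (u, v, depth)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy, z)


# Shift every point by +1 along x (identity rotation).
T = [[1.0, 0.0, 0.0, 1.0],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0],
     [0.0, 0.0, 0.0, 1.0]]
moved = transform_points([((0.0, 0.0, 2.0), [5.0])], T)
print(moved[0][0], project(moved[0][0], 2.0, 2.0, 0.0, 0.0))  # (1.0, 0.0, 2.0) (1.0, 0.0, 2.0)
```

Keeping the depth \(z\) from the projection matters for the next step, where it decides which points occlude which.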

The repositioned features are rendered into the target view using a differentiable neural point cloud renderer.
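The rendering step can be illustrated with a simplified soft z-buffer: each projected point is splatted onto the pixels within a radius, the nearest `k` points per pixel are kept, and their features are alpha-composited front to back. This is a plain-Python forward-pass sketch, not the SynSin renderer: the linear falloff `1 - dist / radius`, the pixel-center convention, and the parameter names `radius` and `k` are assumptions for illustration, and a practical implementation would operate on tensors so that gradients can flow through it.

```python
import math
from typing import List, Sequence, Tuple

# A projected point is (u, v, depth, feature_vector) in target-image coordinates.
Projected = Tuple[float, float, float, List[float]]


def render(points: Sequence[Projected], height: int, width: int,
           radius: float = 1.0, k: int = 2) -> List[List[List[float]]]:
    """Splat points into an H x W x C feature image with soft alpha-over compositing."""
    channels = len(points[0][3]) if points else 0
    image = [[[0.0] * channels for _ in range(width)] for _ in range(height)]
    for row in range(height):
        for col in range(width):
            hits = []
            for u, v, z, feat in points:
                dist = math.hypot(u - col, v - row)
                if dist < radius and z > 0:
                    # Assumed linear falloff: full opacity at the point center,
                    # fading to zero at the splat radius.
                    hits.append((z, 1.0 - dist / radius, feat))
            hits.sort(key=lambda h: h[0])  # z-buffer: nearest points first
            transmittance = 1.0
            for _, alpha, feat in hits[:k]:  # keep only the k nearest points
                for c in range(channels):
                    image[row][col][c] += transmittance * alpha * feat[c]
                transmittance *= 1.0 - alpha  # light left for points behind
    return image


points = [(0.0, 0.0, 1.0, [1.0]), (0.0, 0.0, 2.0, [10.0])]
print(render(points, height=1, width=2))  # [[[1.0], [0.0]]]
```

In the usage example, the near point has alpha 1.0 at pixel 0 and fully occludes the far point, while pixel 1 lies exactly at the splat radius and receives nothing. Soft splats with partial alpha are what let gradients reach points that are not the single nearest one.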

Finally, the rendered features are passed through a refinement network \(g\), which produces the synthesized output image.

Contents

- Architecture
- Feature Network
- Depth Network
- Neural Point Cloud Rendering
- Refinement Network
- Evaluation
- References