Latest revision as of 16:37, 31 January 2022

SynSin: End-to-end View Synthesis from a Single Image (CVPR 2020)

Authors: Olivia Wiles, Georgia Gkioxari, Richard Szeliski, Justin Johnson

Affiliations: University of Oxford, Facebook AI Research, Facebook, University of Michigan

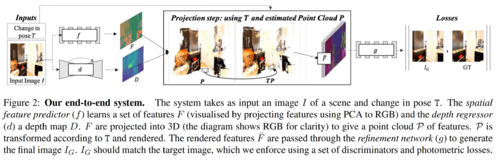

Method

- First, a depth map and a set of per-pixel features are predicted from the input image using depth network \(d\) and feature network \(f\).

- The depths are used to create a 3D point cloud of features \(P\).

- The points in \(P\) are repositioned into the target view using the transformation matrix \(T\).

- Repositioned features are rendered using a neural point cloud renderer.

- Rendered features are passed through a refinement network \(g\).
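
The geometric part of the steps above (unprojecting with depth, applying \(T\), and re-rendering) can be sketched in NumPy. This is only an illustrative sketch, not the authors' implementation: the function names, the pinhole intrinsics matrix `K`, and the 4x4 form of `T` are assumptions made for the example, and a hard nearest-point z-buffer stands in for the neural point cloud renderer. The learned networks \(d\), \(f\), and \(g\) are not modeled; depth and features are passed in as plain arrays.

```python
import numpy as np

def unproject(depth, K):
    """Lift every pixel (u, v) with depth z to a 3D point in camera coordinates."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(np.float64)
    rays = pix @ np.linalg.inv(K).T          # back-project pixels through K^-1
    return rays * depth.reshape(-1, 1)       # scale each ray by its depth

def transform(points, T):
    """Apply a 4x4 rigid transformation T to an (N, 3) point cloud."""
    homog = np.concatenate([points, np.ones((len(points), 1))], axis=1)
    return (homog @ T.T)[:, :3]

def splat(points, feats, K, h, w):
    """Project points into the target view; the nearest point wins each pixel.

    Assumption: this hard z-buffer is a simplified stand-in for a
    neural point cloud renderer.
    """
    out = np.zeros((h, w, feats.shape[1]))
    zbuf = np.full((h, w), np.inf)
    proj = points @ K.T
    z = proj[:, 2]
    valid = z > 1e-8                         # keep points in front of the camera
    u = np.round(proj[valid, 0] / z[valid]).astype(int)
    v = np.round(proj[valid, 1] / z[valid]).astype(int)
    for ui, vi, zi, fi in zip(u, v, z[valid], feats[valid]):
        if 0 <= ui < w and 0 <= vi < h and zi < zbuf[vi, ui]:
            zbuf[vi, ui] = zi
            out[vi, ui] = fi
    return out

def synthesize(depth, feat_map, K, T):
    """Unproject, reposition with T, and re-render a (H, W, C) feature map."""
    h, w, c = feat_map.shape
    points = unproject(depth, K)             # point cloud P
    moved = transform(points, T)             # P in the target view
    return splat(moved, feat_map.reshape(-1, c), K, h, w)
```

In the full method the rendered feature map would then go through the refinement network \(g\), which fills in regions that no point lands on; in this sketch those pixels are simply left at zero.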