Transformer (machine learning model)

Revision as of 15:03, 23 November 2020

"Attention Is All You Need" paper (Vaswani et al., 2017)

A neural network architecture by Google.

As of this revision, it achieves state-of-the-art results on most NLP tasks and has largely replaced RNNs for these tasks.

Architecture

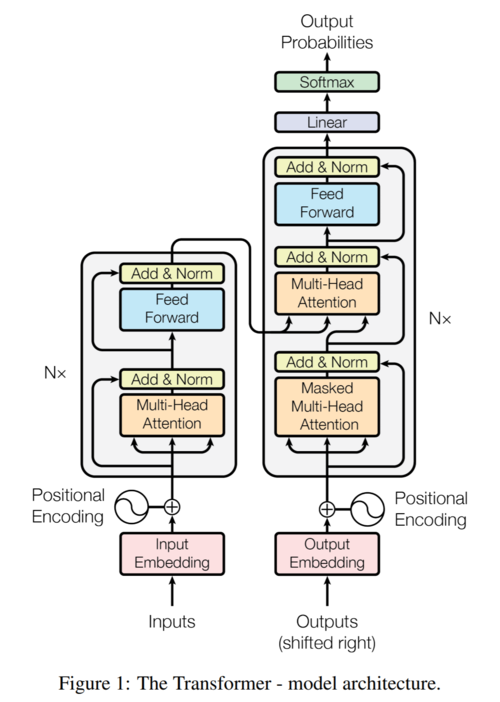

The Transformer uses an encoder-decoder architecture.

Both the encoder and decoder are composed of multiple identical layers, each of which has attention and feedforward sublayers.

Attention

The main contribution of the Transformer is an architecture built entirely on attention, without recurrence or convolution.

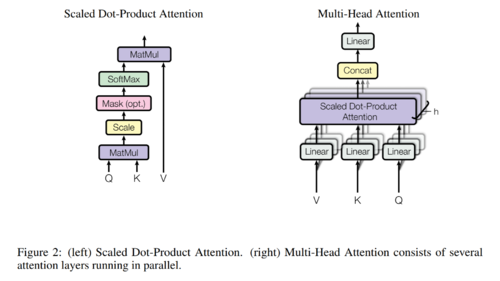

The attention block outputs a weighted average of the values in a dictionary of key-value pairs, where each value's weight is determined by how well its key matches the query.

In the image above:

- \(\displaystyle Q\) represents queries (each query is a vector)

- \(\displaystyle K\) represents keys

- \(\displaystyle V\) represents values

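The attention block can be represented as the following equation, where \(\displaystyle d_k\) is the dimension of each key vector:

- \(\displaystyle \operatorname{Attention}(Q, K, V) = \operatorname{SoftMax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V\)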
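The equation above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function names, the shapes, and the random inputs are assumptions chosen for the example, and batching and masking are left out.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> output of shape (n_q, d_v)."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # similarity of every query to every key
    weights = softmax(scores, axis=-1)   # each row is a distribution over the keys
    return weights @ V, weights          # weighted average of the values

# Hypothetical toy inputs: 2 queries, 3 key-value pairs.
rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 4))
K = rng.normal(size=(3, 4))
V = rng.normal(size=(3, 5))

out, weights = scaled_dot_product_attention(Q, K, V)
print(out.shape)             # one output vector per query
print(weights.sum(axis=-1))  # attention weights for each query sum to 1
```

Dividing by \(\displaystyle \sqrt{d_k}\) keeps the dot products from growing with the key dimension, which would otherwise push the softmax towards very peaked distributions.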

Encoder

The encoder receives as input the input embedding added to a positional encoding.
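As a rough sketch of that input step, the snippet below adds a positional encoding to token embeddings. The sinusoidal form is taken from the original paper and is an assumption here, since this page does not say which encoding is used. The embedding table, vocabulary size, and token ids are hypothetical.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Assumed sinusoidal encoding; d_model must be even in this sketch."""
    positions = np.arange(seq_len)[:, None]        # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even feature indices
    pe[:, 1::2] = np.cos(angles)  # odd feature indices
    return pe

# Hypothetical embedding table and input sequence.
rng = np.random.default_rng(0)
vocab_size, d_model = 10, 8
embedding_table = rng.normal(size=(vocab_size, d_model))
token_ids = np.array([1, 5, 2])

embeddings = embedding_table[token_ids]                    # (3, d_model)
pe = sinusoidal_positional_encoding(len(token_ids), d_model)
encoder_input = embeddings + pe                            # what the first layer sees
print(encoder_input.shape)
```

Because attention itself is order-agnostic, this sum is the only place where token position enters the model.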

The encoder is composed of N=6 layers, each with 2 sublayers.

Each layer contains a multi-headed attention sublayer followed by a feed-forward sublayer.

Both sublayers are residual blocks: the output of each sublayer is added to its input.
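The encoder structure described above might be sketched as follows. All parameter names, sizes, and the random initialisation are hypothetical. The layer normalization after each residual sum follows the original paper and is an assumption beyond what this page states; learned normalization gains and biases are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def layer_norm(x, eps=1e-6):
    # Assumed from the original paper; no learned scale or shift in this sketch.
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)

def multi_head_self_attention(x, p, num_heads):
    seq_len, d_model = x.shape
    d_k = d_model // num_heads

    def split(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_k)
        return t.reshape(seq_len, num_heads, d_k).transpose(1, 0, 2)

    Q, K, V = split(x @ p["W_q"]), split(x @ p["W_k"]), split(x @ p["W_v"])
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)  # (num_heads, seq_len, seq_len)
    heads = softmax(scores) @ V                       # (num_heads, seq_len, d_k)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ p["W_o"]

def feed_forward(x, p):
    # Position-wise two-layer network with a ReLU in between.
    return np.maximum(0, x @ p["W1"] + p["b1"]) @ p["W2"] + p["b2"]

def encoder_layer(x, p, num_heads):
    x = layer_norm(x + multi_head_self_attention(x, p, num_heads))  # residual 1
    return layer_norm(x + feed_forward(x, p))                       # residual 2

def init_params(rng, d_model, d_ff):
    # Hypothetical small random weights, for illustration only.
    def w(*shape):
        return rng.normal(scale=0.1, size=shape)
    return {"W_q": w(d_model, d_model), "W_k": w(d_model, d_model),
            "W_v": w(d_model, d_model), "W_o": w(d_model, d_model),
            "W1": w(d_model, d_ff), "b1": np.zeros(d_ff),
            "W2": w(d_ff, d_model), "b2": np.zeros(d_model)}

rng = np.random.default_rng(0)
seq_len, d_model, d_ff, num_heads, N = 5, 8, 32, 2, 6
layers = [init_params(rng, d_model, d_ff) for _ in range(N)]

x = rng.normal(size=(seq_len, d_model))  # stands in for embedding + positional encoding
out = x
for p in layers:                         # N identical layers applied in sequence
    out = encoder_layer(out, p, num_heads)
print(out.shape)
```

The residual sums require every sublayer to map `d_model` features back to `d_model` features, which is why the output shape matches the input shape after all six layers.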

Decoder

Code

See Hugging Face

Resources

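- Guides and explanations
  - [The Illustrated Transformer](https://jalammar.github.io/illustrated-transformer/)
  - [The Annotated Transformer](https://nlp.seas.harvard.edu/2018/04/03/attention.html)
  - [YouTube video by Yannic Kilcher](https://www.youtube.com/watch?v=iDulhoQ2pro)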