StyleGAN: Difference between revisions

Created page with "StyleGAN CVPR 2019<br> [https://arxiv.org/abs/1812.04948 2018 Paper (arxiv)] [http://openaccess.thecvf.com/content_CVPR_2019/html/Karras_A_Style-Based_Generator_Architecture_f..." |

|||

| (7 intermediate revisions by the same user not shown) | |||

| Line 9: | Line 9: | ||

==Architecture== | ==Architecture== | ||

[[File:StyleGAN architecture.PNG | thumb | 300px | Architecture of StyleGAN from their paper]] | [[File:StyleGAN architecture.PNG | thumb | 300px | Architecture of StyleGAN from their paper]] | ||

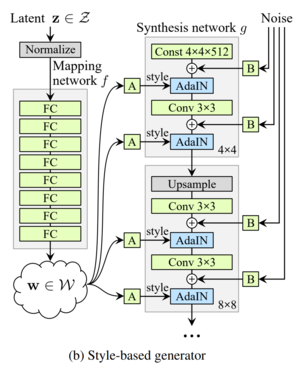

StyleGAN consists of a mapping network <math>f</math> and a synthesis network <math>g</math>. | |||

===Mapping Network=== | |||

The goal of the mapping network is to generate a latent vector <math>w</math>.<br> | |||

This latent <math>w</math> is used by the synthesis network as input the each AdaIN block.<br> | |||

Before each AdaIN block, a learned affine transformation converts <math>w</math> into a "style" in the form of mean and standard deviation.<br> | |||

The mapping network <math>f</math> consists of 8 fully connected layers with leaky relu activations at each layer.<br> | |||

The input and output of this vector is an array of size 512.<br> | |||

===Synthesis Network=== | |||

The synthesis network is based on progressive growing (ProGAN). | |||

It consists of 9 convolution blocks, one for each resolution from <math>4^2</math> to <math>1024^2</math>.<br> | |||

Each block consists of upsample, 3x3 convolution, AdaIN, 3x3 convolution, AdaIN. | |||

After each convolution layer, a gaussian noise with learned variance (block B in the figure) is added to the feature maps. | |||

====Adaptive Instance Normalization==== | |||

Each AdaIN block takes as input the latent style <math>w</math> and the feature map <math>x</math>.<br> | |||

An affine layer (fully connected with no activation, block A in the figure) converts the style to a mean <math>y_{b,i}</math> and standard deviation <math>y_{s,i}</math>.<br> | |||

Then the feature map is shifted and scaled to have this mean and standard deviation.<br> | |||

* <math>\operatorname{AdaIN}(\mathbf{x}_i, \mathbf{y}) = \mathbf{y}_{s,i}\frac{\mathbf{x}_i - \mu(\mathbf{x}_i)}{\sigma(\mathbf{x}_i)} + \mathbf{y}_{b,i}</math> | |||

==Results== | ==Results== | ||

| Line 16: | Line 37: | ||

==Related== | ==Related== | ||

* [https://arxiv.org/abs/1904.03189 Image2StyleGAN] | * [https://arxiv.org/abs/1904.03189 Image2StyleGAN] | ||

==Resources== | |||

* [https://machinelearningmastery.com/introduction-to-style-generative-adversarial-network-stylegan/ https://machinelearningmastery.com/introduction-to-style-generative-adversarial-network-stylegan/] | |||

* [https://towardsdatascience.com/explained-a-style-based-generator-architecture-for-gans-generating-and-tuning-realistic-6cb2be0f431 https://towardsdatascience.com/explained-a-style-based-generator-architecture-for-gans-generating-and-tuning-realistic-6cb2be0f431] | |||

Latest revision as of 19:35, 4 March 2020

StyleGAN CVPR 2019

2018 Paper (arxiv): https://arxiv.org/abs/1812.04948

CVPR 2019 Open Access

StyleGAN Github

StyleGAN2 Paper

StyleGAN2 Github

An architecture by NVIDIA that controls the "style" of the GAN output by applying adaptive instance normalization (AdaIN) at different layers of the generator.

StyleGAN2 improves upon this by...

Architecture

[Figure: Architecture of StyleGAN from their paper]

StyleGAN consists of a mapping network \(f\) and a synthesis network \(g\).

Mapping Network

The mapping network \(f\) transforms a latent code \(z\), sampled from the input latent space, into an intermediate latent vector \(w\).

This latent \(w\) is fed to every AdaIN block of the synthesis network.

Before each AdaIN block, a learned affine transformation converts \(w\) into a "style": a per-channel mean (bias) and standard deviation (scale).

The mapping network \(f\) consists of 8 fully connected layers, each followed by a leaky ReLU activation.

Both its input \(z\) and its output \(w\) are 512-dimensional vectors.
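A minimal NumPy sketch of \(f\) as described above, using random (untrained) weights. The leaky ReLU slope of 0.2, the 1/sqrt(512) weight scaling, and normalizing \(z\) to unit root-mean-square before the first layer are assumptions not stated on this page; the function names are hypothetical.

```python
import numpy as np

LATENT_DIM = 512   # size of both z and w
NUM_LAYERS = 8     # fully connected layers in f
LEAKY_SLOPE = 0.2  # assumption: negative slope of the leaky ReLU

def leaky_relu(x, slope=LEAKY_SLOPE):
    return np.where(x >= 0, x, slope * x)

def init_mapping_params(rng, dim=LATENT_DIM, num_layers=NUM_LAYERS):
    """Random untrained weights: one (W, b) pair per fully connected layer."""
    params = []
    for _ in range(num_layers):
        W = rng.standard_normal((dim, dim)) / np.sqrt(dim)  # keeps activations bounded
        b = np.zeros(dim)
        params.append((W, b))
    return params

def mapping_network(z, params):
    """Map a batch of latent codes z (N, 512) to intermediate latents w (N, 512)."""
    # Assumption: normalize each z to unit root-mean-square before the MLP.
    x = z / np.sqrt(np.mean(z ** 2, axis=1, keepdims=True) + 1e-8)
    for W, b in params:
        x = leaky_relu(x @ W + b)  # FC layer followed by leaky ReLU
    return x

rng = np.random.default_rng(0)
params = init_mapping_params(rng)
z = rng.standard_normal((4, LATENT_DIM))
w = mapping_network(z, params)
print(w.shape)  # (4, 512)
```

In the full model the same \(w\) would then pass through a separate learned affine map before each AdaIN block.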

Synthesis Network

The synthesis network is based on progressive growing (ProGAN).

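It consists of 9 convolution blocks, one for each resolution from \(4^2\) to \(1024^2\).

Each block after the first consists of upsample, 3x3 convolution, AdaIN, 3x3 convolution, AdaIN. The first (\(4^2\)) block has no upsampling: it starts from a learned 4x4x512 constant, followed by AdaIN, one 3x3 convolution, and AdaIN. With two AdaIN layers per resolution, the network has 18 AdaIN layers in total.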
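The block layout can be written out as a small Python sketch that lists the operations at each resolution and counts the AdaIN layers. Following the paper's figure, it assumes the 4x4 block starts from a learned constant instead of upsampling and a first convolution. The op names are hypothetical labels, not identifiers from the official code; "noise" marks the noise injection added after each convolution.

```python
def synthesis_layout(min_res=4, max_res=1024):
    """List (resolution, ops) for each synthesis block, lowest resolution first."""
    layout = []
    res = min_res
    while res <= max_res:
        if res == min_res:
            # First block: a learned 4x4x512 constant replaces upsample + first conv.
            ops = ["const", "noise", "AdaIN", "conv3x3", "noise", "AdaIN"]
        else:
            ops = ["upsample", "conv3x3", "noise", "AdaIN",
                   "conv3x3", "noise", "AdaIN"]
        layout.append((res, ops))
        res *= 2  # each block doubles the resolution
    return layout

layout = synthesis_layout()
for res, ops in layout:
    print(f"{res}x{res}: {' -> '.join(ops)}")

num_adain = sum(ops.count("AdaIN") for _, ops in layout)
print(len(layout), num_adain)  # 9 18
```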

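After each convolution layer (and after the constant input of the first block), per-pixel Gaussian noise is added to the feature maps: a single-channel noise image is broadcast to all channels and scaled by a learned per-channel factor (block B in the figure).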
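A NumPy sketch of this noise injection, assuming feature maps in (N, C, H, W) layout. `add_noise` and `noise_scale` are hypothetical names. Because one noise image is shared by all channels, channels with equal scale factors receive identical noise.

```python
import numpy as np

def add_noise(x, noise_scale, rng):
    """Add Gaussian noise to feature maps x of shape (N, C, H, W).

    One single-channel noise image is drawn per sample, broadcast to all C
    channels, and multiplied by the per-channel factor noise_scale[c].
    """
    n, c, h, w = x.shape
    noise = rng.standard_normal((n, 1, h, w))           # shared across channels
    return x + noise_scale.reshape(1, c, 1, 1) * noise  # per-channel scaling

rng = np.random.default_rng(0)
x = np.zeros((2, 512, 8, 8))
scale = np.full(512, 0.1)  # hypothetical learned factors, all equal here
y = add_noise(x, scale, rng)
print(y.shape)  # (2, 512, 8, 8)
```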

Adaptive Instance Normalization

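Each AdaIN block takes as input the intermediate latent \(w\) and a feature map \(x\).

An affine layer (fully connected with no activation, block A in the figure) converts \(w\) into a style \(\mathbf{y} = (\mathbf{y}_s, \mathbf{y}_b)\): a standard deviation (scale) \(y_{s,i}\) and a mean (bias) \(y_{b,i}\) for each channel \(i\).

Each channel \(\mathbf{x}_i\) is normalized by its own mean \(\mu(\mathbf{x}_i)\) and standard deviation \(\sigma(\mathbf{x}_i)\), computed over spatial positions for each sample, and is then scaled and shifted to have standard deviation \(y_{s,i}\) and mean \(y_{b,i}\):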

- \(\operatorname{AdaIN}(\mathbf{x}_i, \mathbf{y}) = \mathbf{y}_{s,i}\frac{\mathbf{x}_i - \mu(\mathbf{x}_i)}{\sigma(\mathbf{x}_i)} + \mathbf{y}_{b,i}\)
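A NumPy sketch of block A followed by AdaIN, for feature maps in (N, C, H, W) layout. The affine parameters `A` and `b` are hypothetical random values; initializing the scale half of `b` to 1 (so styles start near the identity) and the small `eps` that guards against division by zero are assumptions, not details given on this page.

```python
import numpy as np

def affine_style(w, A, b):
    """Block A: map w (N, 512) to per-channel scale y_s and bias y_b, each (N, C)."""
    y = w @ A + b  # fully connected, no activation: (N, 2C)
    y_s, y_b = np.split(y, 2, axis=1)
    return y_s, y_b

def adain(x, y_s, y_b, eps=1e-8):
    """AdaIN(x_i, y) = y_s,i * (x_i - mu(x_i)) / sigma(x_i) + y_b,i."""
    mu = x.mean(axis=(2, 3), keepdims=True)  # per sample and channel
    sigma = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)
    x_norm = (x - mu) / sigma
    return y_s[:, :, None, None] * x_norm + y_b[:, :, None, None]

rng = np.random.default_rng(0)
N, C = 2, 64
w = rng.standard_normal((N, 512))
A = 0.01 * rng.standard_normal((512, 2 * C))
b = np.concatenate([np.ones(C), np.zeros(C)])  # assumed init: scale ~1, bias ~0
y_s, y_b = affine_style(w, A, b)

x = 3.0 * rng.standard_normal((N, C, 16, 16)) + 5.0
out = adain(x, y_s, y_b)
# Each output channel now has mean y_b and standard deviation |y_s|.
print(np.allclose(out.mean(axis=(2, 3)), y_b))  # True
```

Because the statistics are recomputed per sample and channel, the style of the incoming feature map is discarded and replaced by the one derived from \(w\).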