Revision as of 12:50, 12 November 2019

SinGAN

Paper

Supplementary Material

Website

Github Official PyTorch Implementation (https://github.com/tamarott/SinGAN)

SinGAN: Learning a Generative Model from a Single Natural Image

Authors:

Tamar Rott Shaham (Technion),

Tali Dekel (Google Research),

Tomer Michaeli (Technion)

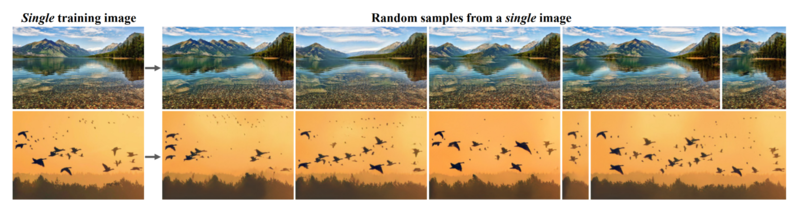

Basic Idea

Bootstrap patches of the original image and build GANs that add fine details to blurry patches at different patch sizes.

- Start by building a GAN to generate low-resolution versions of the original image

- Then upscale the image and build a GAN to add details to patches of your upscaled image

- Fix the parameters of the previous GAN. Upscale the outputs and repeat.
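The coarse-to-fine schedule above implies a pyramid of image resolutions. The following is a minimal sketch of how those resolutions could be computed; the function name `pyramid_sizes` is hypothetical and the default scale factor `r = 4/3` is an assumption that is not stated on this page.

```python
def pyramid_sizes(height, width, num_scales, r=4 / 3):
    """Return (height, width) per scale, ordered coarsest (n = N) to finest (n = 0).

    Scale n is the original image downsampled by a factor of r ** n.
    The default r is an assumption, not a value taken from this page.
    """
    sizes = []
    for n in range(num_scales, -1, -1):
        factor = r ** n
        sizes.append((max(1, round(height / factor)),
                      max(1, round(width / factor))))
    return sizes


# Example with a factor of 2 so the numbers are easy to check by hand.
print(pyramid_sizes(256, 128, 3, r=2.0))
# [(32, 16), (64, 32), (128, 64), (256, 128)]
```

Training would then proceed through this list from left to right, one GAN per entry.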

Architecture

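They build a pyramid of \(\displaystyle N+1\) GANs, indexed from the coarsest scale \(\displaystyle N\) to the finest scale \(\displaystyle 0\).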

Each GAN \(\displaystyle G_n\) adds details to patches of the image produced by GAN \(\displaystyle G_{n+1}\) below it.

The final GAN \(\displaystyle G_0\) adds only fine details.
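To illustrate how the generators chain together at sampling time, here is a toy sketch on 1-D lists instead of images. The names `upsample`, `sample`, and `toy_generator` are hypothetical, the upsampling factor of 2 is chosen only for simplicity, and the residual form of the toy generator is an assumption made for illustration.

```python
import random


def upsample(signal, factor=2):
    """Nearest-neighbour upsampling of a 1-D list (stand-in for image resizing)."""
    return [v for v in signal for _ in range(factor)]


def toy_generator(noise, prev):
    """Hypothetical generator: adds small noise-driven details to `prev`."""
    return [p + 0.1 * z for z, p in zip(noise, prev)]


def sample(generators, coarsest_len, noise_std=1.0, seed=0):
    """Run generators from coarsest to finest.

    Each generator receives fresh noise and the upsampled output of the
    generator before it; the coarsest one starts from an all-zero input.
    """
    rng = random.Random(seed)
    current = [0.0] * coarsest_len
    for i, gen in enumerate(generators):
        if i > 0:
            current = upsample(current)
        noise = [rng.gauss(0.0, noise_std) for _ in current]
        current = gen(noise, current)
    return current


out = sample([toy_generator] * 3, coarsest_len=4)
print(len(out))  # 16
```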

Generator

They use one generator per scale of the pyramid.

Each generator consists of 5 convolutional blocks:

Conv(\(\displaystyle 3 \times 3\))-BatchNorm-LeakyReLU.

They use 32 kernels per block at the coarsest scale and increase \(\displaystyle 2 \times\) every 4 scales.
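The kernel schedule described above can be written as a one-line rule. This is a sketch only: `kernels_at_scale` is a hypothetical helper, scales are counted upward from the coarsest one, and any upper cap on the kernel count is not described here and is not modeled.

```python
def kernels_at_scale(scales_from_coarsest, base=32, every=4):
    """Kernels per block: `base` at the coarsest scale, doubled every `every` scales."""
    return base * 2 ** (scales_from_coarsest // every)


print([kernels_at_scale(i) for i in range(9)])
# [32, 32, 32, 32, 64, 64, 64, 64, 128]
```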

Discriminator

The architecture is the same as the generator.

The patch size is \(\displaystyle 11 \times 11\), which matches the receptive field of five stacked \(\displaystyle 3 \times 3\) convolutions.
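The patch size can be checked with a short receptive-field calculation. This sketch assumes stride-1 convolutions without dilation, which this page does not state explicitly; `receptive_field` is a hypothetical helper.

```python
def receptive_field(num_layers, kernel_size):
    """Receptive field of stacked stride-1, undilated convolutions.

    Each layer widens the field by (kernel_size - 1) pixels.
    """
    return 1 + num_layers * (kernel_size - 1)


print(receptive_field(5, 3))  # 11
```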

Training and Loss Function

\(\displaystyle \min_{G_n} \max_{D_n} \mathcal{L}_{adv}(G_n, D_n) + \alpha \mathcal{L}_{rec}(G_n)\)

They use a combination of the standard GAN adversarial loss and a reconstruction loss.

Adversarial Loss

They use the WGAN-GP loss.

This drops the log from the traditional cross-entropy loss.

\(\displaystyle \min_{G_n} \max_{D_n} \mathcal{L}_{adv}(G_n, D_n) + \alpha \mathcal{L}_{rec}(G_n)\)

The final loss is the average over all the patches.
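For reference, the adversarial term can be sketched in the usual WGAN-GP form, written so that it matches the min-max convention of the objective above. The symbols \(\displaystyle \lambda\) (gradient penalty weight), \(\displaystyle \tilde{x}_n\) (a generated sample at scale \(\displaystyle n\)) and \(\displaystyle \hat{x}\) (a random interpolate between a real and a generated sample) are notation introduced here, not taken from this page:

\(\displaystyle \mathcal{L}_{adv}(G_n, D_n) = \mathbb{E}[D_n(x_n)] - \mathbb{E}[D_n(\tilde{x}_n)] - \lambda \, \mathbb{E}[(\Vert \nabla_{\hat{x}} D_n(\hat{x}) \Vert_2 - 1)^2]\)

The discriminator maximizes this quantity, so in code it minimizes the negated expression. The generator only influences the middle term.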

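```python
# Excerpt from the training loop; netD, netG, real, noise, prev and opt are
# defined elsewhere. The gradient-penalty term is not shown in this excerpt.

# When training the discriminator (critic)
netD.zero_grad()
output = netD(real).to(opt.device)  # per-patch score map for the real image
errD_real = -output.mean()          # average over all patches
errD_real.backward(retain_graph=True)

# ... make noise and prev ...
fake = netG(noise.detach(), prev)
output = netD(fake.detach())        # detach so only netD receives gradients
errD_fake = output.mean()
errD_fake.backward(retain_graph=True)

# When training the generator
output = netD(fake)
errG = -output.mean()
errG.backward(retain_graph=True)
```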

Reconstruction Loss

\(\displaystyle \mathcal{L}_{rec} = \Vert G_n(0,(\bar{x}^{rec}_{n+1})\uparrow^r) - x_n \Vert ^2\)

The reconstruction loss ensures that a specific set of input noise maps reproduces the original image.

For this term, rather than inputting random noise to the generators, they input

\(\displaystyle \{z_N^{rec}, z_{N-1}^{rec}, ..., z_0^{rec}\} = \{z^*, 0, ..., 0\}\)

where the initial noise \(\displaystyle z^*\) is drawn once and then fixed during the rest of the training.

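The standard deviation \(\displaystyle \sigma_n\) of the noise \(\displaystyle z_n\) is proportional to the root mean squared error (RMSE) between the upsampled reconstruction from the coarser scale, \(\displaystyle (\bar{x}^{rec}_{n+1})\uparrow^r\), and the original image \(\displaystyle x_n\) at that scale. A larger error means more detail has to be added at that scale.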
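The RMSE-based noise scaling can be sketched on flat lists of pixel values. The helper names `rmse` and `noise_std` are hypothetical, and the proportionality constant `base_amp=0.1` is an assumed value used only for illustration.

```python
import math


def rmse(a, b):
    """Root mean squared error between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def noise_std(upsampled_rec, real, base_amp=0.1):
    """Noise standard deviation proportional to the RMSE at this scale.

    `base_amp` is an assumed proportionality constant.
    """
    return base_amp * rmse(upsampled_rec, real)


# A reconstruction that is off by 2 everywhere gives an RMSE of 2.
print(noise_std([0.0] * 4, [2.0] * 4))  # 0.2
```

A perfect reconstruction gives a standard deviation of zero, so no noise would be injected at that scale.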

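```python
import torch.nn as nn

# Excerpt; netG, z_opt, z_prev, real, alpha and opt are defined elsewhere.
loss = nn.MSELoss()
# Fixed reconstruction noise, scaled by the noise amplitude, added to z_prev.
Z_opt = opt.noise_amp * z_opt + z_prev
rec_loss = alpha * loss(netG(Z_opt.detach(), z_prev), real)
rec_loss.backward(retain_graph=True)
```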

Evaluation

They evaluate their method using an Amazon Mechanical Turk (AMT) user study and the Single Image Fréchet Inception Distance (SIFID).

Amazon Mechanical Turk Study

Frechet Inception Distance

Results

Below are images of their results from their paper and website.

Applications

The following are applications they identify.

The basic idea for each of these applications is to start at an intermediate scale of the pyramid rather than at the bottom (coarsest) scale.

While the bottom generator is a purely unconditional GAN, the intermediate generators are more akin to conditional GANs, since each one is conditioned on the upsampled image from the scale below it.
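Starting at an intermediate scale can be illustrated with a toy sketch on 1-D lists. All names here (`upsample`, `toy_generator`, `generate_from_scale`) are hypothetical, the upsampling factor of 2 is chosen for simplicity, and the injected input stands in for whatever image an application supplies.

```python
import random


def upsample(signal, factor=2):
    """Nearest-neighbour upsampling of a 1-D list (stand-in for image resizing)."""
    return [v for v in signal for _ in range(factor)]


def toy_generator(noise, prev):
    """Hypothetical generator: adds small noise-driven details to `prev`."""
    return [p + 0.1 * z for z, p in zip(noise, prev)]


def generate_from_scale(generators, start, injected, noise_std=0.0, seed=0):
    """Run only generators[start:], using `injected` as the input at scale `start`.

    `generators` is ordered coarsest to finest; the scales before `start`
    are skipped entirely.
    """
    rng = random.Random(seed)
    current = list(injected)
    for i, gen in enumerate(generators[start:]):
        if i > 0:
            current = upsample(current)
        noise = [rng.gauss(0.0, noise_std) for _ in current]
        current = gen(noise, current)
    return current


# With zero noise the toy generators leave the injected content unchanged.
print(generate_from_scale([toy_generator] * 3, start=1, injected=[1.0, 2.0]))
# [1.0, 1.0, 2.0, 2.0]
```

Choosing a coarser `start` lets the model change more of the global structure, while a finer `start` only touches details.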

Super-Resolution

Paint-to-Image

Harmonization

Editing

Single Image Animation

Repo

The official repo for SinGAN can be found on GitHub: https://github.com/tamarott/SinGAN