Convolutional neural network


Convolutional neural networks (CNNs) are primarily used for image tasks such as computer vision or image generation, though they can be applied to any data laid out on a rectangular grid where neighboring elements are spatially related.

Motivation

Convolutional neural networks leverage the following properties of images:

- Stationarity or shift-invariance - objects in an image should be recognized regardless of their position

- Locality or local-connectivity - nearby pixels are more relevant than distant pixels

- Compositionality - objects in images have a multi-resolution structure.

Convolutions

PyTorch Convolution Layers

Types of convolutions animations

Here, we will explain 2D convolutions.

Suppose we have the following input image:

\(\displaystyle
\begin{bmatrix}
1 & 2 & 3 & 4 & 5\\
6 & 7 & 8 & 2 & 3\\
2 & 5 & 9 & 9 & 5\\
4 & 8 & 8 & 2 & 1
\end{bmatrix}
\)

and the following 3x3 kernel:

\(\displaystyle
\begin{bmatrix}
1 & 1 & 1\\
2 & 1 & 2\\
3 & 2 & 1
\end{bmatrix}
\)

For each possible position of the 3x3 kernel over the input image, we perform an element-wise multiplication (\(\displaystyle \odot\)) and sum over all entries to get a single value.

Placing the kernel in the first position would yield:

\(\displaystyle
\begin{bmatrix}
1 & 2 & 3\\
6 & 7 & 8\\
2 & 5 & 9
\end{bmatrix}
\odot
\begin{bmatrix}
1 & 1 & 1\\
2 & 1 & 2\\
3 & 2 & 1
\end{bmatrix}
=
\begin{bmatrix}
1 & 2 & 3\\
12 & 7 & 16\\
6 & 10 & 9
\end{bmatrix}
\)

Summing all the elements gives \(\displaystyle 66\), which becomes the first entry of the output.
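The sliding-window computation above can be sketched in plain Python. This is a minimal illustration rather than a reference implementation: the function name `conv2d_valid` is hypothetical, and the input is assumed to be a 4x5 image stored row by row, which is the layout consistent with the first 3x3 patch shown above.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Element-wise multiply the kernel with the patch at (y, x), then sum.
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += kernel[i][j] * image[y + i][x + j]
            row.append(total)
        output.append(row)
    return output


# Assumed 4x5 row-major layout of the example image.
image = [
    [1, 2, 3, 4, 5],
    [6, 7, 8, 2, 3],
    [2, 5, 9, 9, 5],
    [4, 8, 8, 2, 1],
]
kernel = [
    [1, 1, 1],
    [2, 1, 2],
    [3, 2, 1],
]

result = conv2d_valid(image, kernel)
print(result)  # [[66, 77, 86], [84, 96, 79]]
```

The top-left entry reproduces the value 66 from the worked example, and the 4x5 input shrinks to a 2x3 output because the kernel fits in only 2 vertical and 3 horizontal positions.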

Stride

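The stride is the number of pixels the kernel moves between successive positions. It is typically 1 or 2; a stride of 2 roughly halves the output size along each axis.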
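To make the effect of stride concrete, the following sketch lists where the kernel starts along one axis. It assumes no padding, and the helper names `kernel_starts` and `output_size` are hypothetical.

```python
def kernel_starts(input_size, kernel_size, stride=1):
    """Start offsets of the kernel along one axis (no padding)."""
    return list(range(0, input_size - kernel_size + 1, stride))


def output_size(input_size, kernel_size, stride=1):
    """Number of kernel positions along one axis (no padding)."""
    return (input_size - kernel_size) // stride + 1


print(kernel_starts(7, 3, stride=1))  # [0, 1, 2, 3, 4]
print(kernel_starts(7, 3, stride=2))  # [0, 2, 4]
print(output_size(7, 3, stride=2))    # 3
```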

Padding

Without padding, a convolutional layer with a kernel larger than 1x1 yields an output smaller than its input. Padding the border of the input enlarges it, so the output can keep the original size.

Types of padding:

- Zero: the border is filled with zeros.

- Mirror: the border is filled by reflecting the image across its edge.
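The two padding types can be sketched as follows. The function names are hypothetical, and "mirror" is assumed here to mean reflection that does not repeat the edge value (what PyTorch calls `reflect` mode); some libraries also offer a variant that repeats the edge.

```python
def pad_zero(image, p):
    """Surround `image` with a border of `p` zeros on every side."""
    width = len(image[0]) + 2 * p
    rows = [[0] * p + list(row) + [0] * p for row in image]
    top = [[0] * width for _ in range(p)]
    bottom = [[0] * width for _ in range(p)]
    return top + rows + bottom


def pad_mirror(image, p):
    """Reflect `image` across its edges; assumes p < height and p < width."""
    def reflect(seq):
        # Mirror the p entries next to each end, excluding the end itself.
        return seq[p:0:-1] + seq + seq[-2:-p - 2:-1]

    rows = [reflect(list(row)) for row in image]
    return [row[:] for row in reflect(rows)]


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

for row in pad_zero(image, 1):
    print(row)
# [0, 0, 0, 0, 0]
# [0, 1, 2, 3, 0]
# [0, 4, 5, 6, 0]
# [0, 7, 8, 9, 0]
# [0, 0, 0, 0, 0]

for row in pad_mirror(image, 1):
    print(row)
# [5, 4, 5, 6, 5]
# [2, 1, 2, 3, 2]
# [5, 4, 5, 6, 5]
# [8, 7, 8, 9, 8]
# [5, 4, 5, 6, 5]
```

With a 3x3 kernel and stride 1, a padding of 1 on each side is enough for the output to have the same size as the unpadded input.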

Dilation

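Dilation is the spacing between the pixels that the kernel samples.

In PyTorch's convention, a dilation of 1 is an ordinary convolution, and a dilation of 2 applies a 3x3 kernel over a 5x5 region (some texts instead count the skipped pixels and call this a dilation of 1). This is equivalent to a 5x5 kernel in which every weight with an odd row or column index (\(\displaystyle i \% 2 == 1\), zero-indexed) is set to 0.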
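The equivalence with a larger, mostly zero kernel can be checked with a short sketch. It follows the PyTorch convention, in which `dilation=1` leaves the kernel unchanged and `dilation=2` spreads a 3x3 kernel over a 5x5 region. The function name `dilate_kernel` is hypothetical and a square kernel is assumed.

```python
def dilate_kernel(kernel, dilation):
    """Expand a square kernel by inserting zeros between its weights."""
    k = len(kernel)
    size = dilation * (k - 1) + 1  # effective kernel size
    dilated = [[0] * size for _ in range(size)]
    for i in range(k):
        for j in range(k):
            dilated[i * dilation][j * dilation] = kernel[i][j]
    return dilated


kernel = [
    [1, 1, 1],
    [2, 1, 2],
    [3, 2, 1],
]

for row in dilate_kernel(kernel, 2):
    print(row)
# [1, 0, 1, 0, 1]
# [0, 0, 0, 0, 0]
# [2, 0, 1, 0, 2]
# [0, 0, 0, 0, 0]
# [3, 0, 2, 0, 1]
```

The original weights land on even row and column indices, and every position with an odd row or column index stays 0.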

Groups

Other Types of Convolutions

Transpose Convolution

See Medium Post

Instead of your 3x3 kernel taking 9 values as input and returning 1 value (\(\displaystyle \sum_i \sum_j w_{ij} \cdot i_{i+x,j+y}\)), the kernel now takes 1 value and returns 9 (\(\displaystyle w_{ij} \cdot i_{x,y}\)).
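A minimal sketch of this "one value in, a full kernel of values out" view is given below. The function name `conv_transpose2d` is hypothetical; padding is assumed to be zero, and contributions that overlap in the output are summed.

```python
def conv_transpose2d(image, kernel, stride=1):
    """Scatter each input value, scaled by the kernel, into the output."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    out_h = (h - 1) * stride + kh
    out_w = (w - 1) * stride + kw
    output = [[0] * out_w for _ in range(out_h)]
    for y in range(h):
        for x in range(w):
            # One input value produces kh * kw output contributions.
            for i in range(kh):
                for j in range(kw):
                    output[y * stride + i][x * stride + j] += kernel[i][j] * image[y][x]
    return output


kernel = [
    [1, 1, 1],
    [2, 1, 2],
    [3, 2, 1],
]
print(conv_transpose2d([[2]], kernel))
# [[2, 2, 2], [4, 2, 4], [6, 4, 2]]

ones = [[1, 1], [1, 1]]
for row in conv_transpose2d([[1, 2], [3, 4]], ones, stride=2):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

In the second call the stride equals the kernel size, so the scattered patches do not overlap and the operation acts as a 2x upsampling.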

Gated Convolution

See Gated Convolution (ICCV 2019)

Given an image, we have two convolution layers \(\displaystyle k_{feature}\) and \(\displaystyle k_{gate}\).

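The output is \(\displaystyle O = \phi(k_{feature}(I)) \odot \sigma(k_{gate}(I))\), where \(\displaystyle \phi\) is an activation function such as ReLU and \(\displaystyle \sigma\) is the sigmoid, so each gate value lies between 0 and 1.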
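The gating step can be sketched on its own, assuming the two convolutions have already been applied. Here `feature_map` and `gate_map` stand in for \(\displaystyle k_{feature}(I)\) and \(\displaystyle k_{gate}(I)\), ReLU is an assumed choice for \(\displaystyle \phi\), and the function name `gate` is hypothetical.

```python
import math


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gate(feature_map, gate_map, activation=relu):
    """Element-wise product of activated features and sigmoid gates."""
    return [
        [activation(f) * sigmoid(g) for f, g in zip(f_row, g_row)]
        for f_row, g_row in zip(feature_map, gate_map)
    ]


feature_map = [[2.0, -1.0]]  # stand-in for k_feature(I)
gate_map = [[0.0, 5.0]]      # stand-in for k_gate(I)
print(gate(feature_map, gate_map))  # [[1.0, 0.0]]
```

A gate input of 0 lets half of the feature through, while a large negative gate input would suppress it almost entirely.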

Pooling

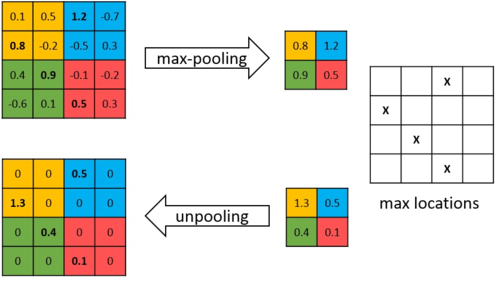

Unpooling

During max pooling, record the index of each maximum in "switch variables".

Then when unpooling, write each pooled value back to its recorded index. All other indices get a value of 0.
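The switch-variable bookkeeping can be sketched as follows. This assumes non-overlapping square windows, with the stride equal to the window size; the function names are hypothetical, and ties within a window are resolved in favor of the first maximum encountered.

```python
def max_pool2d(image, size=2):
    """Return the pooled values and the (row, col) index of each maximum."""
    pooled, switches = [], []
    for y in range(0, len(image) - size + 1, size):
        pooled_row, switch_row = [], []
        for x in range(0, len(image[0]) - size + 1, size):
            window = [
                (image[y + i][x + j], (y + i, x + j))
                for i in range(size)
                for j in range(size)
            ]
            value, position = max(window, key=lambda item: item[0])
            pooled_row.append(value)
            switch_row.append(position)
        pooled.append(pooled_row)
        switches.append(switch_row)
    return pooled, switches


def max_unpool2d(pooled, switches, height, width):
    """Place each pooled value at its recorded index; everything else is 0."""
    output = [[0] * width for _ in range(height)]
    for pooled_row, switch_row in zip(pooled, switches):
        for value, (y, x) in zip(pooled_row, switch_row):
            output[y][x] = value
    return output


image = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 1],
    [3, 4, 0, 8],
]

pooled, switches = max_pool2d(image)
print(pooled)    # [[6, 4], [7, 9]]
print(switches)  # [[(1, 1), (0, 3)], [(2, 0), (2, 2)]]

for row in max_unpool2d(pooled, switches, 4, 4):
    print(row)
# [0, 0, 0, 4]
# [0, 6, 0, 0]
# [7, 0, 9, 0]
# [0, 0, 0, 0]
```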